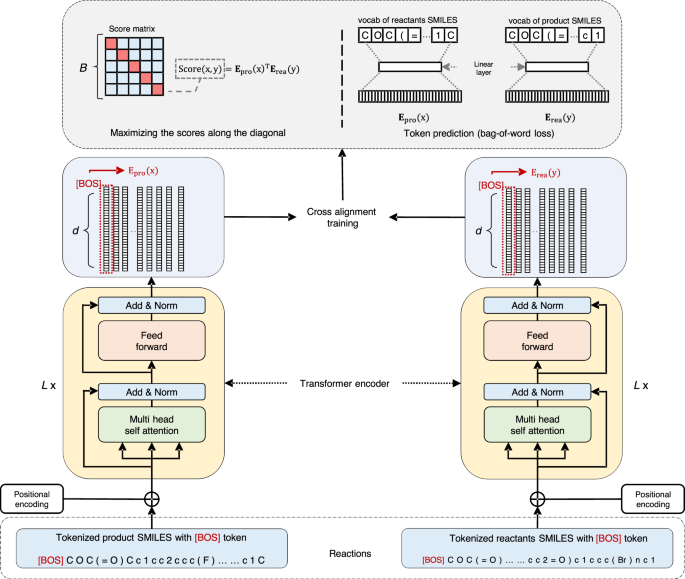

Single-step retrosynthesis prediction by leveraging commonly preserved substructures | Nature Communications

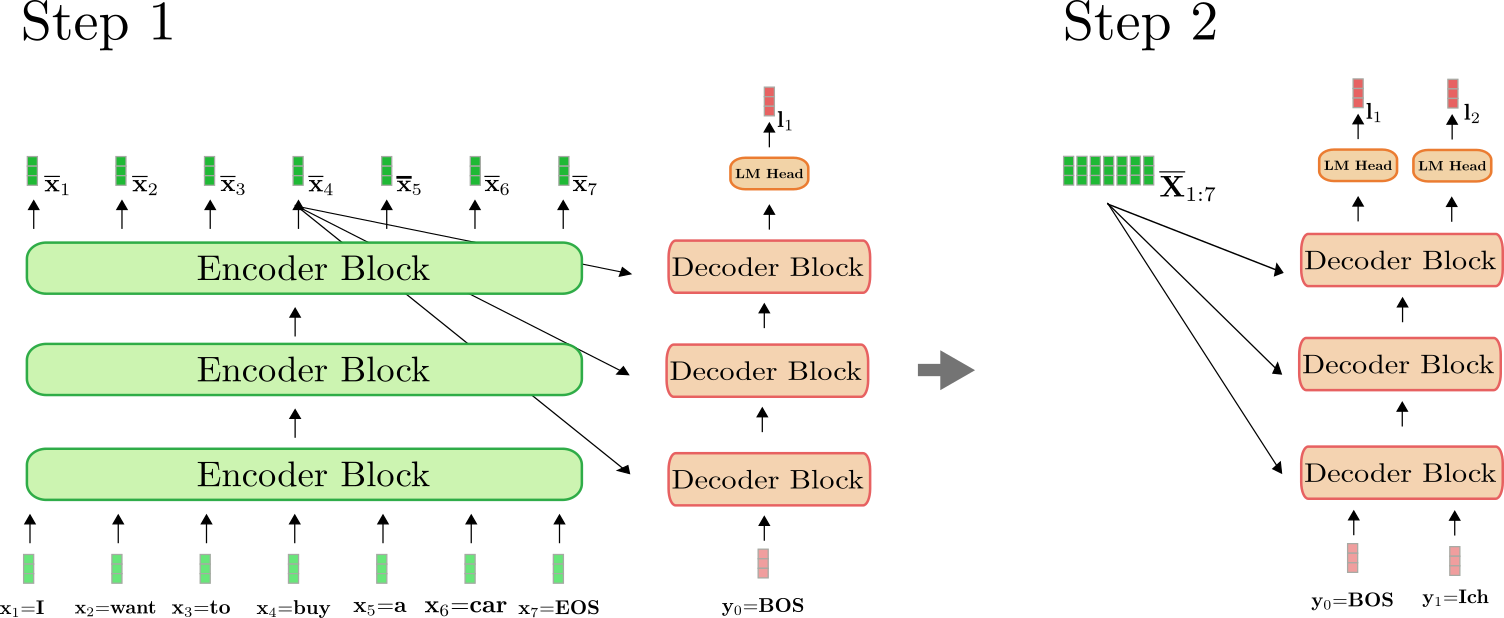

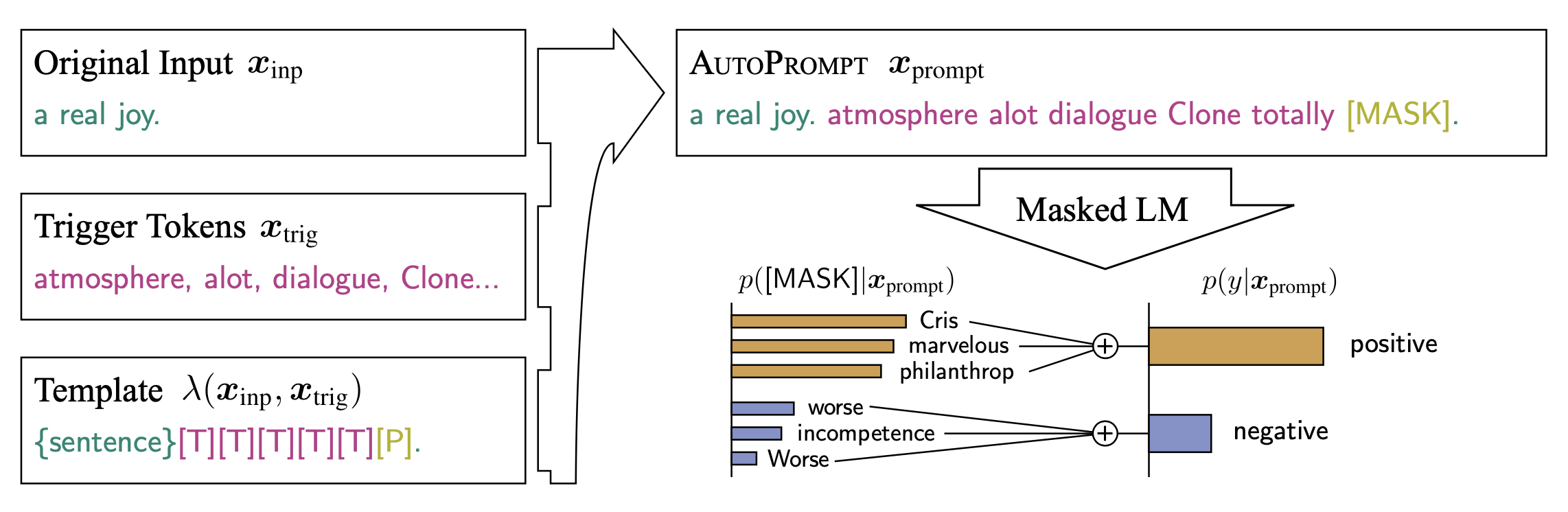

14.1. Tokenized Inputs Outputs - Transformer, T5_EN - Deep Learning Bible - 3. Natural Language Processing - Eng.

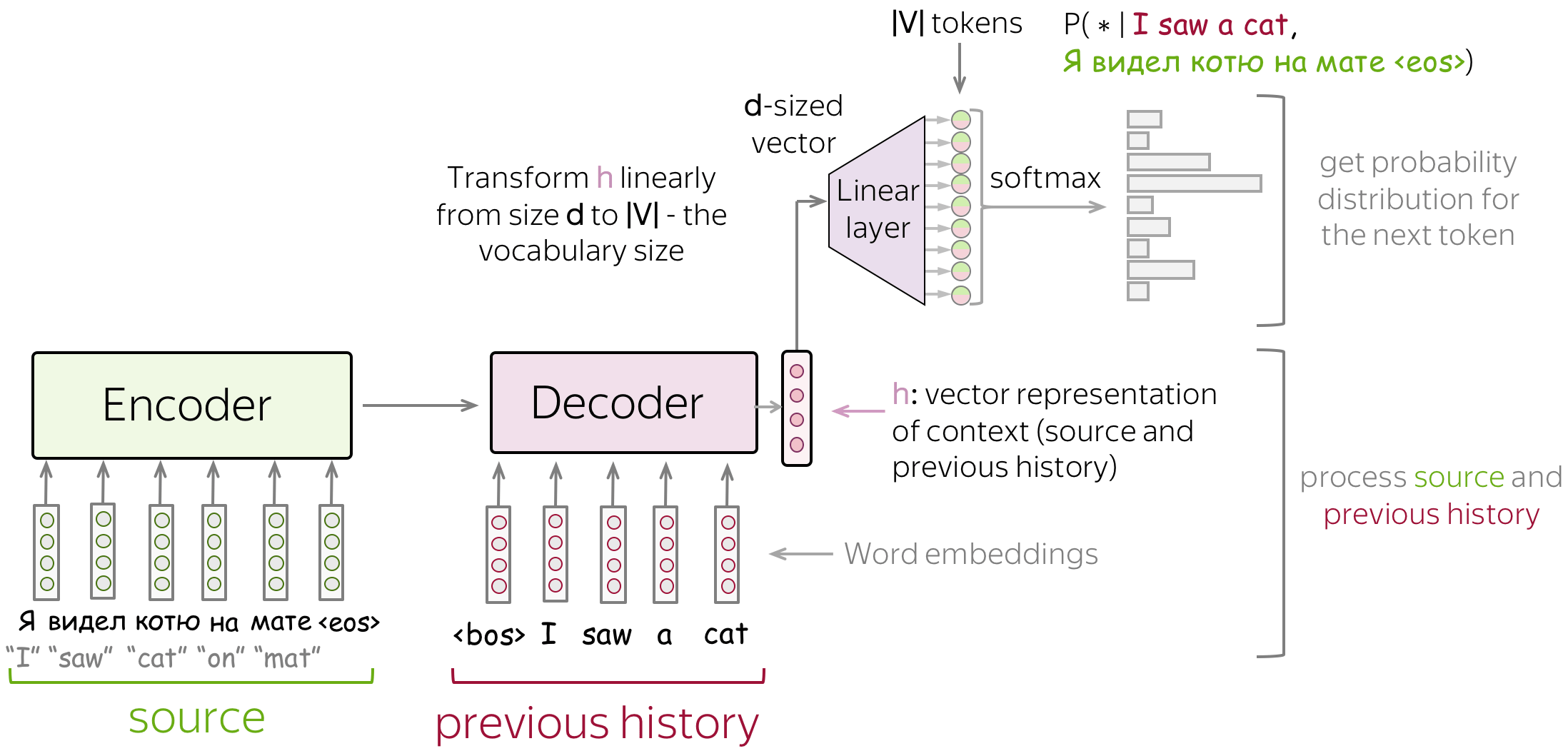

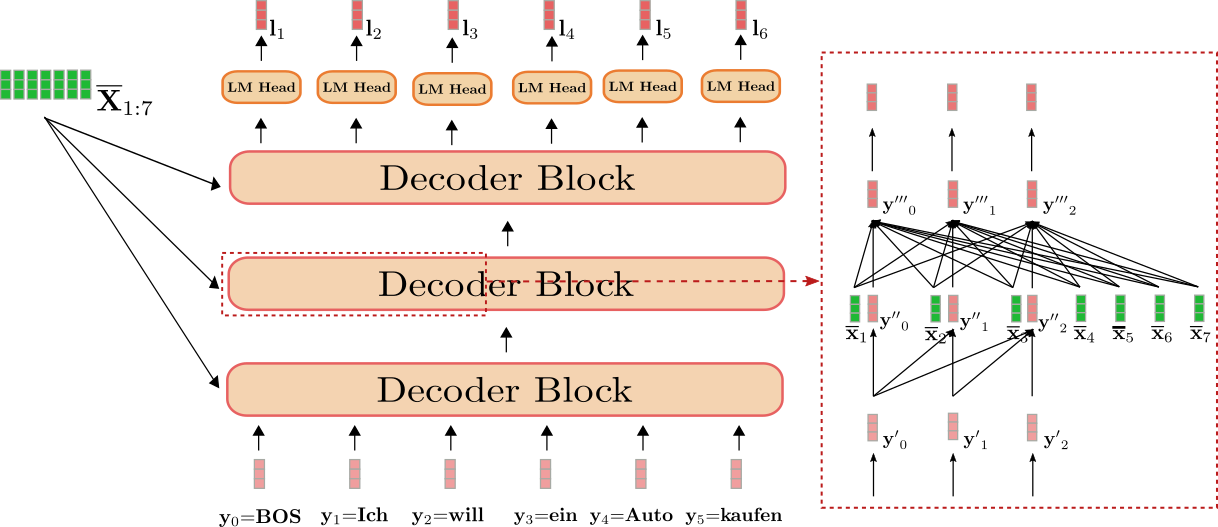

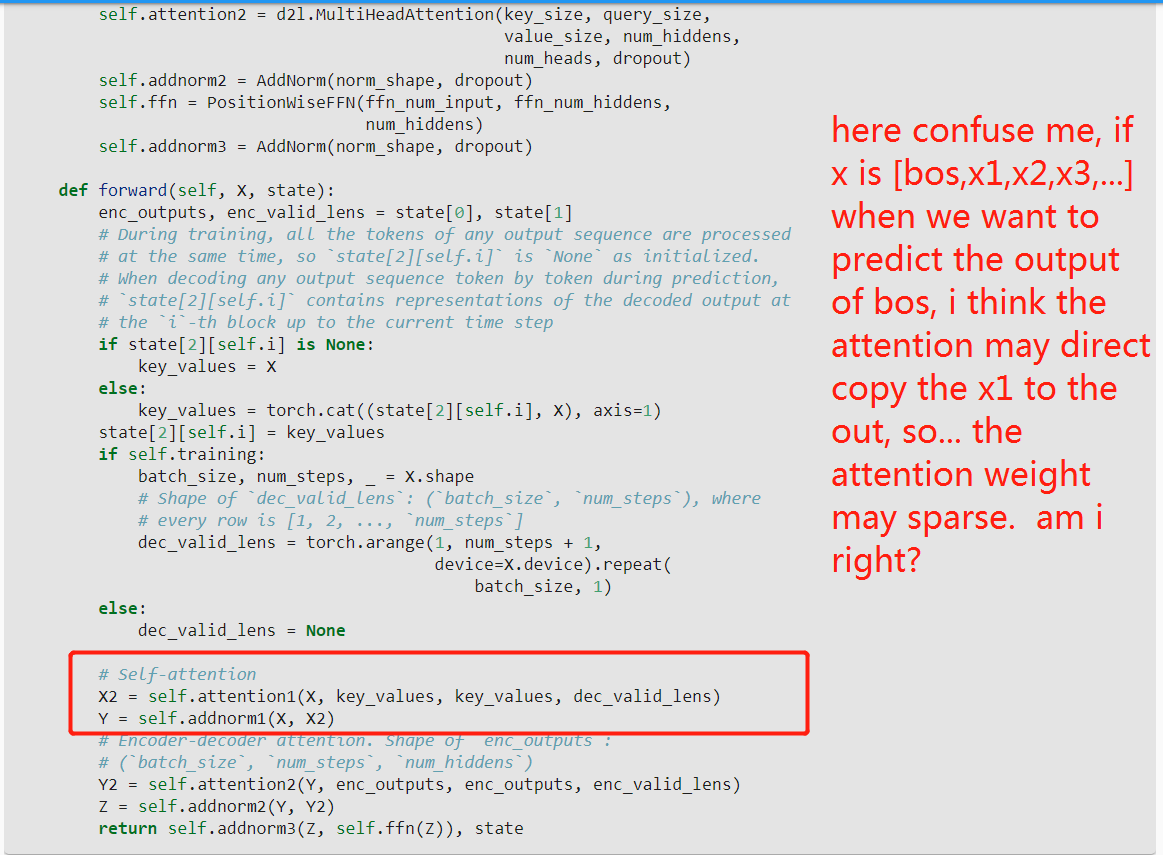

10.7. Encoder-Decoder Seq2Seq for Machine Translation — Dive into Deep Learning 1.0.0-beta0 documentation

Zeta Alpha on Twitter: "🎉Trends in AI February 2023 is here🎉 And well it's been one of the busiest months we remember in AI: @google and @microsoft racing to bring LLMs to

An open-source natural language processing toolkit to support software development: addressing automatic bug detection, code sum

Sustainability | Free Full-Text | Design and Verification of Process Discovery Based on NLP Approach and Visualization for Manufacturing Industry

![Transformer [59], the encoder-decoder architecture we use for the CQR... | Download Scientific Diagram](https://www.researchgate.net/publication/355793489/figure/fig2/AS:1162635886235648@1654205404504/Transformer-59-the-encoder-decoder-architecture-we-use-for-the-CQR-task-in-this-work.png)

![How to use [HuggingFace's] Transformers Pre-Trained tokenizers? | by Ala Alam Falaki | Medium](https://miro.medium.com/v2/resize:fit:1400/1*RcSo3UpBTorMI6YJvVizSg.jpeg)